I’ve been spending a lot of time thinking about how new forms of AI and machine learning will shape society over the next decade. One area I’ve gone deep on is how the widespread manipulation and generation of digital assets will manifest itself in our daily lives.

My main takeaway from my research thus far is this:

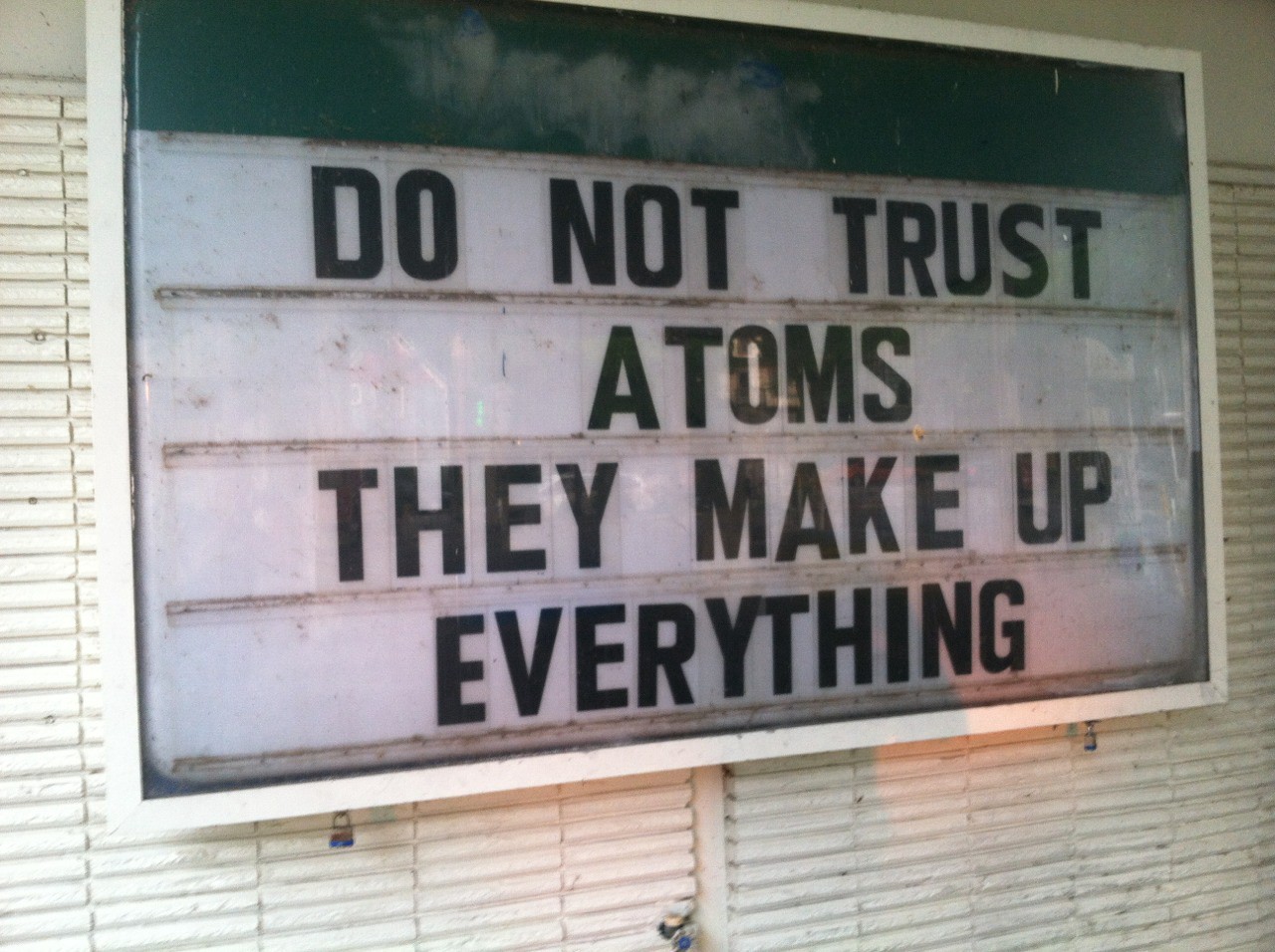

We are moving from a default trust society to a default skeptic society. Or, put another way, while today humans largely trust what they hear and see, tomorrow they will not.

How did skepticism creep into society today?

In my lifetime I’ve seen this shift towards skepticism take place. ~10 years ago, people believed what they could see in relatively high fidelity and what they were told by major media outlets. Photoshop made people skeptical of incomprehensible images. Smartphones, social media, and internet publishing have given rise to clickbait and “fake news”, making society in 2018 more skeptical about internet content (and the platforms that push it) than in 2017.

This started with the rise of creative editing tools, has moved to a data-driven understanding of human beings and how they consume information, and ends with machine learning making it far more scalable to create and manipulate all types of visual and audio assets.

But today, seeing is still believing: we trust certain sources to keep reporting the truth, and we believe them by default.

What happens when that trust starts to erode?

What happens once the first person or group displays the power of scalable and believable fake audio or video as a weapon? We recently saw an early sign of this with DeepFake, a technology used to superimpose celebrities’ faces onto porn (among other things).

The result? The internet collectively freaked out and multiple platforms took an uncharacteristically censorship-heavy approach, quickly banning the content. And while deepfakes often manipulate something as sacred as sex acts, the targeting of celebrities in our sex-desensitized world makes me believe that the potential societal impact of technologies like this will be realized not through similar manipulations, but through what could happen next.

DeepFake and whatever follows it will plant a seed of doubt and skepticism in people’s minds and destroy the idea that you “have to see it to believe it”. But only once false assets are used for something as meaningful as setting off some sort of widespread trigger across financial markets or government agencies, or when they change the outcome of something as large as an election (again?), will that seed blossom into a mindset shift for society.

Eventually this could trickle down from “important to verify” facts to day-to-day conversations. As a generation that records everything we do in order to create social proof, will our existing digital footprints become worthless as quickly as our feeds became “valuable”? Will the rebirth of trust in these types of assets come from a return to analog mediums?

Perhaps the idea of “real versus not” will mean less when our worlds are already being overrun by multiple realities and we are hopping between physical and digital worlds.

These are all interesting exercises to think through, but as an investor, and as a human opining on the future, I largely look at the world through inflection points. While I’m not confident about what the catalysts will be in this particular case, I’m fairly confident that we are fast approaching an inflection point in how humans internally compute all new information. From there we have to figure out whether the power of shared beliefs and incentives can keep us a default trust society, or if a new wave of technology will make us default skeptics.
